As a business coach at Unknown University of Applied Sciences, Jeroen Coelen guides students through their start-up journey, offering coaching sessions where students can expect honest feedback, inspiration, and relevant advice. Jeroen uses his expertise in navigating the early stages of start-ups to inspire students to begin new ventures with confidence. In his blogs, Jeroen offers practical tips on developing problem-fit solutions, suggests ways to better understand your customers, and recommends frameworks that help make sense of the chaos of start-ups. We are lucky to host Jeroen’s blogs, as they provide unique insights into the nature of the start-up process. Continue reading below.

In this edition:

- Why Build-Measure-Learn doesn’t always work

- How experiment cycles frontload learning

- Bonus: Job to be Done lecture

Forget about Build-Measure-Learn

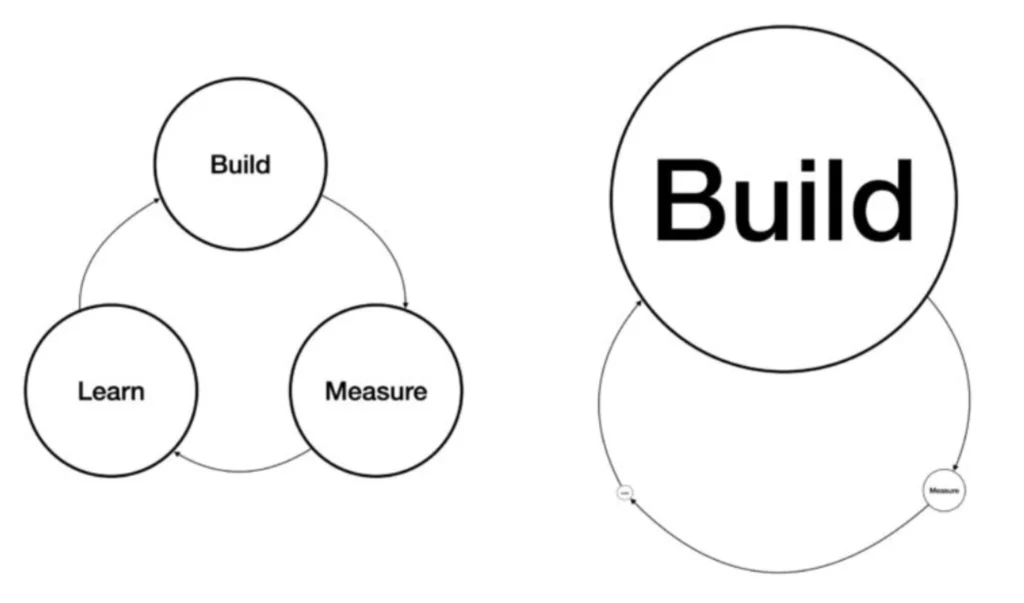

Build-Measure-Learn (BML) sounds good on paper, but in practice it often ends up lopsided (see image below).

I often see founders spending most of their time building, much less on measuring, and ignoring learning altogether. Oh no, why would that be a bad thing?

Well, for starters, spending too much time building gets you into the ‘false-sense-of-progress’ trap. When you are building, there is nothing saying: this sucks. (I’m excluding bugs in faulty code.)

Besides buggy code, you are the only feedback loop and it’s tempting to be gentle with yourself.

The risks of pre-product-market-fit startups are often not about technical feasibility, yet ‘building’ mostly reduces uncertainty about the feasibility of a startup idea.

Focus on learning instead of building

BML doesn’t include an explicit step that asks: “What should we learn next?” Even though Ries talks about risky assumptions, the build-measure-learn loop is not explicitly linked to them.

In practice, this can result in unguided iterations towards nowhere, while risky assumptions are a great vehicle for focused learning.

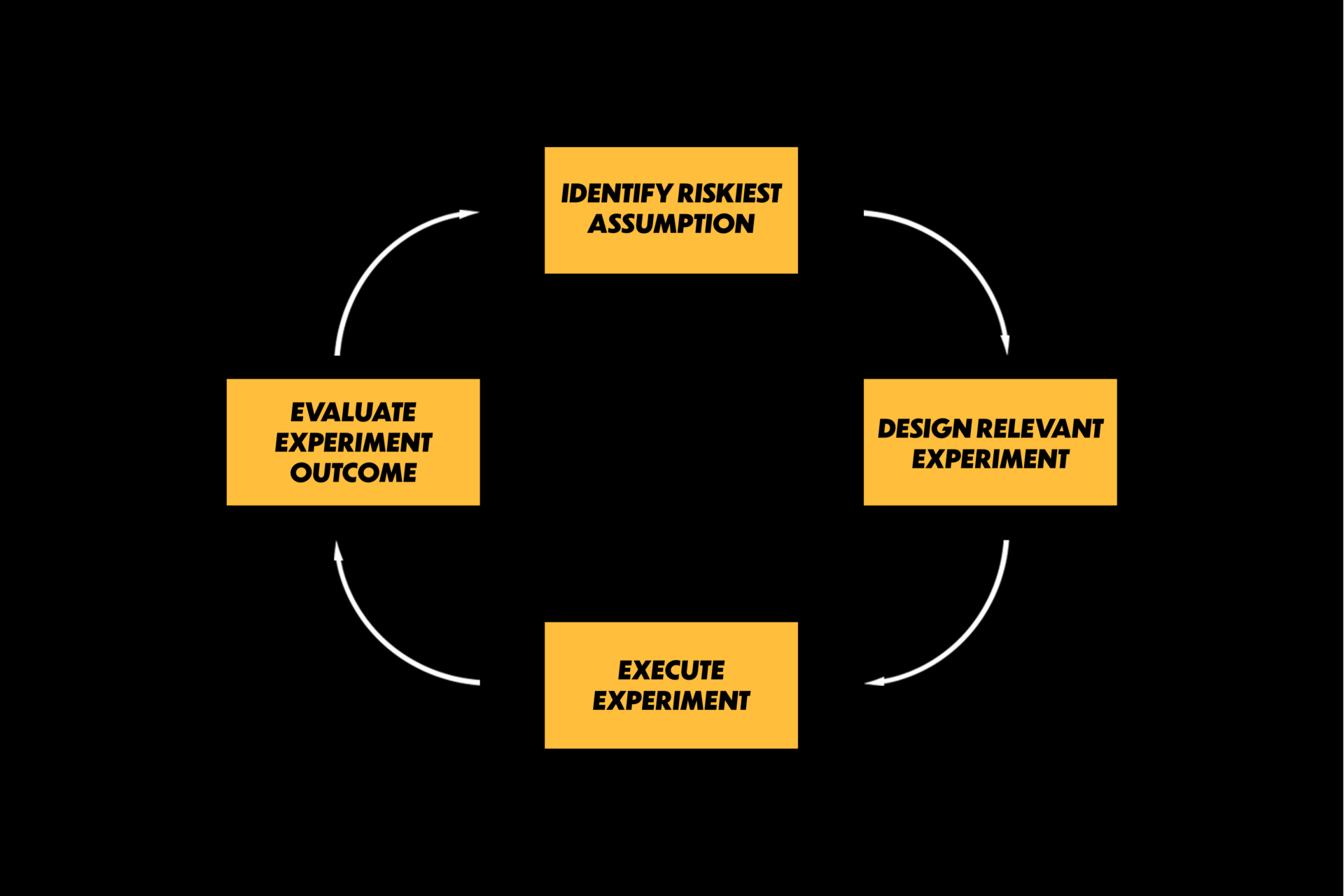

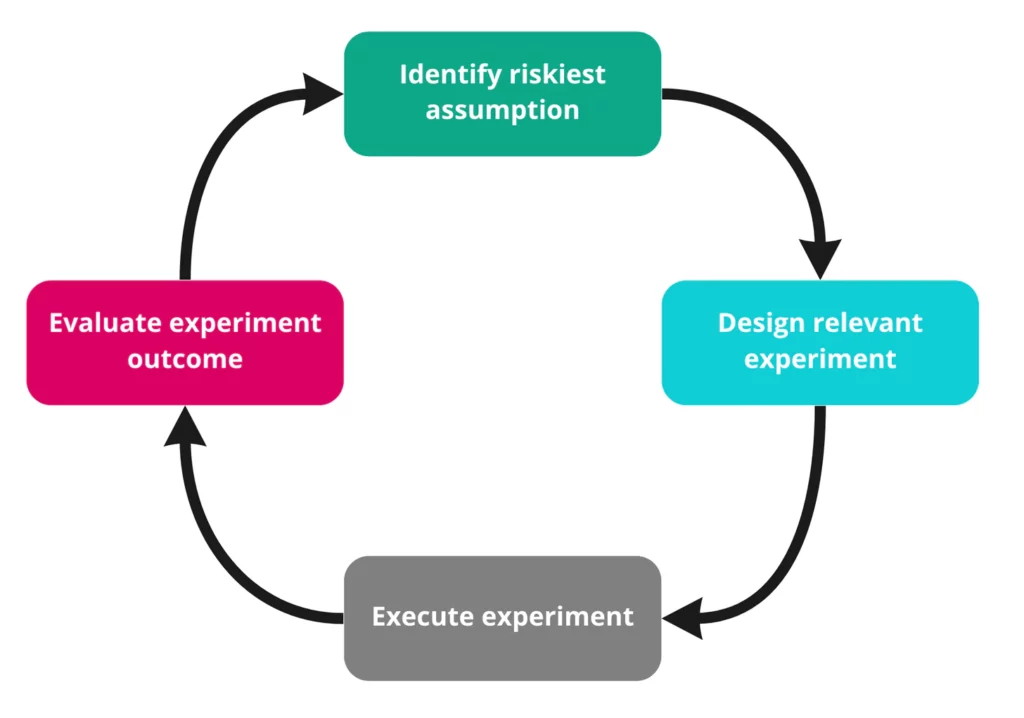

Using the experiment cycle, your focus will shift from building to learning. I hate acronyms, so I’m not going to call it the IDEE cycle, but you can, I’ll allow it.

When to start using experiment cycles?

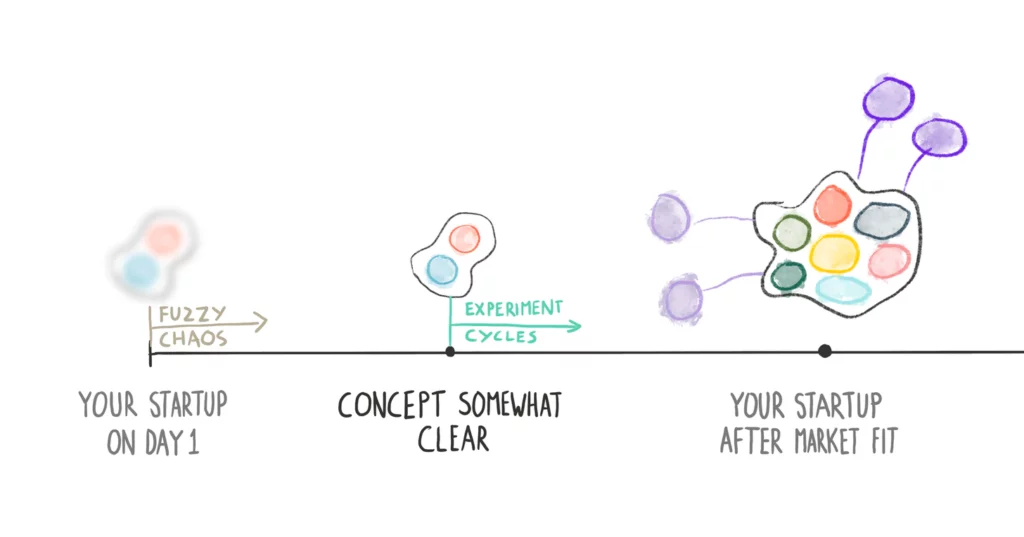

You can’t formulate risky assumptions about an unclear concept. I write about this extensively here. The only risky assumptions in the ‘fuzzy chaos’ stage are either ‘is there a problem to solve?’ or ‘can we add something to create value?’

These risky assumptions are so broad that, in my experience, just talking to a lot of customers and stakeholders helps you best navigate that fuzzy chaos. At some point, you will experience that you can start thinking about concrete value propositions. That’s your cue.

A quick rundown of the experiment cycle

1. Select risky assumption

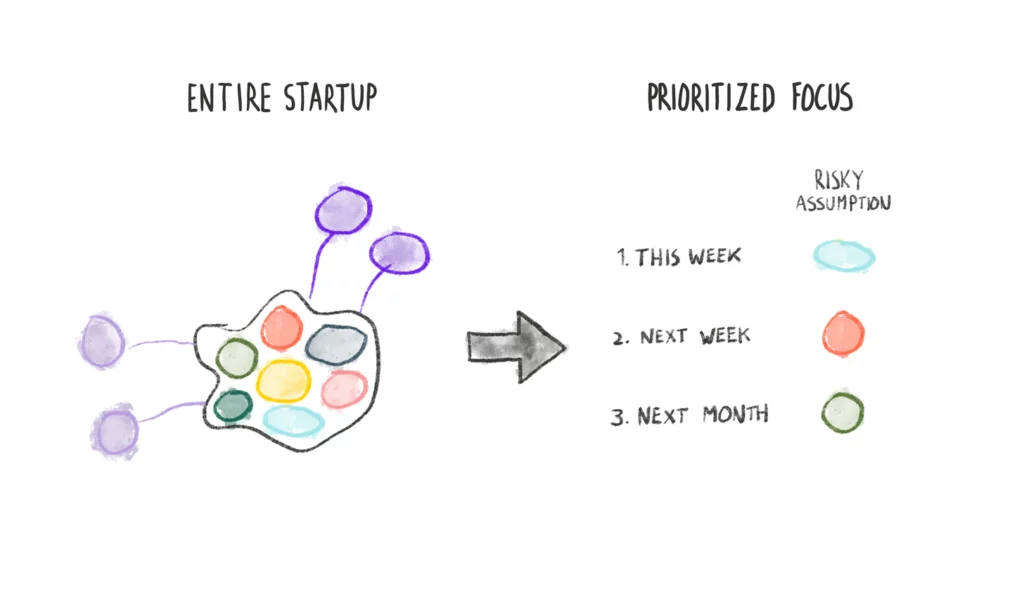

Take a look at your startup concept. You can do this via many lenses, such as the BM-toolkit, Business Model Canvas, Desirability-Viability-Feasibility, Lean Canvas. All these aim to highlight structural flaws in your business. Each building block is an assumption.

Risky assumption: A statement that needs to be true for your startup to be successful, but for which you lack the evidence to check whether it is true.

In my experience, 9 out of 10 times the riskiest assumptions in an early-stage startup hover around desirability. That’s why I hate the focus on building extensive prototypes.

Tip: Don’t be gentle, be honest. If you have a bicycle subscription idea and you live in the Netherlands, don’t say a risky assumption is “People use their bicycle in their lives to get around”. Obviously, that needs to be true, but you can see it’s true by looking out of your window once. No experiment needed. “People are willing to pay a subscription for a bicycle” was a much riskier assumption in Swapfiets’ early days.

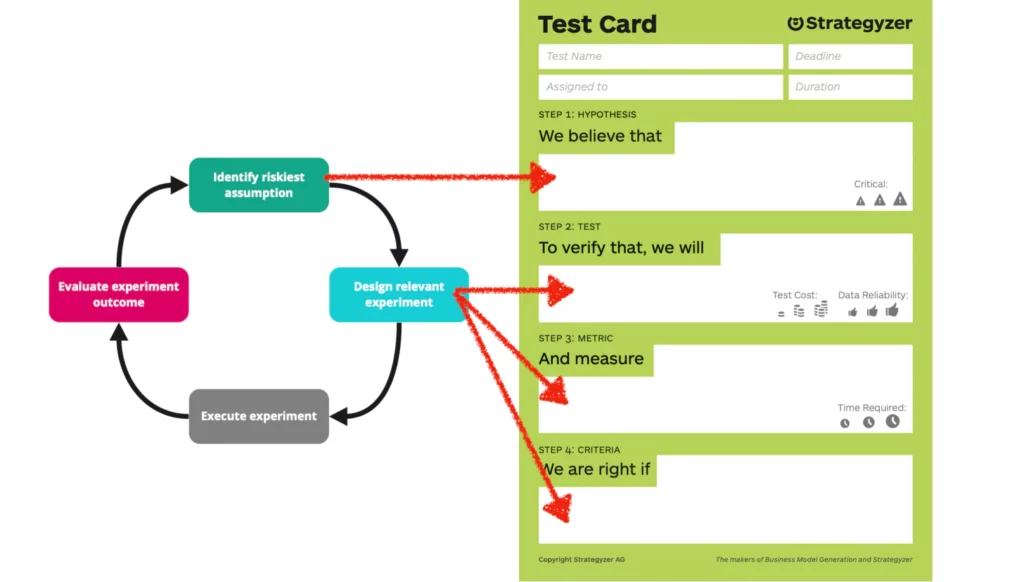

2. Design experiment

Figure out which experiment gets data that helps you reduce the risk in your risky assumption.

There are many experiments to choose from. For inspiration, look into the book ‘Testing Business Ideas’. Selecting the right experiment for the risky assumption can sometimes be tricky. This is an intuitive muscle to train.

Use a ‘test card’ (PDF) to capture your experiment setup. Test cards are very short and super handy for making sure you have ticked all the boxes.

Tips on measures

“Not everything that counts can be counted, and not everything that can be counted counts.” – Cameron (1963)

Pay special attention to the measure. Quantified measures are overrated. Sometimes, you just need to observe stuff.

Don’t just measure scans on a QR code on a flyer when you can also observe the reaction of people while reading that flyer. Both are equally valuable.

3. Execute experiment

Do your thing. You might fail. That’s okay.

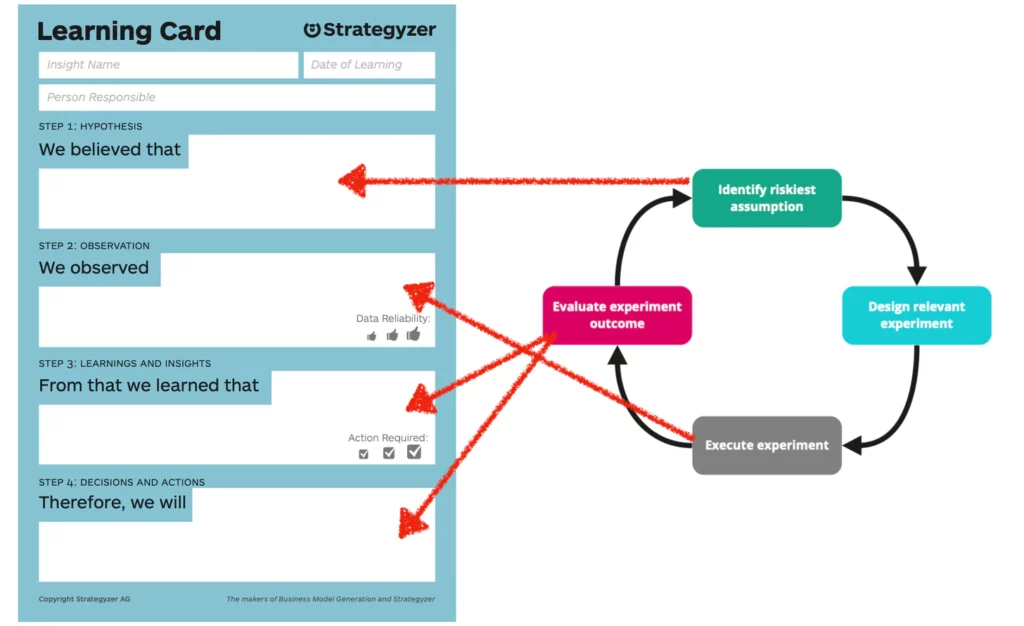

4. Evaluate experiment

Use a learning card (PDF) to process your results. Discuss with your team. How should we interpret the data? What are the implications of this result?

“We are right if” is not a holy grail

Don’t take the ‘we are right if’ point on your test card too seriously. That cutoff point is just a probe for your own reflection.

A food startup I coached ran an experiment in which they wanted to increase sales via Instagram. Their ‘we are right if’: sell 25 meals in 1 day. However, they ran into a production limit of 16. That means they sold out, for the first time ever.

They didn’t meet their goal. Is that not a very successful experiment, to sell out? Now they learned about their production capacities. The next experiment focused on expanding those capacities.
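For those who like to see this written down, here is a minimal Python sketch of that food startup example. The `TestCard` class, the `evaluate` function, the `capacity` parameter and the wording of the assumption are all made up for illustration; they are not part of the official test card or learning card. The point is only that a missed cutoff can mean different things, so the output is a prompt for team discussion, not a verdict.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TestCard:
    """Hypothetical, stripped-down test card (field names are assumptions)."""
    assumption: str  # the risky assumption being tested
    measure: str     # what you count or observe
    target: int      # the 'we are right if' cutoff


def evaluate(card: TestCard, observed: int, capacity: Optional[int] = None) -> str:
    """Return a short reading of the result to discuss with your team."""
    if observed >= card.target:
        return "target met"
    # A missed target can hide a different lesson: demand may have
    # exceeded what you were able to deliver.
    if capacity is not None and observed >= capacity:
        return "target missed, but capped by capacity"
    return "target missed"


# Numbers from the food startup story; the assumption text is a paraphrase.
card = TestCard(
    assumption="Promoting on Instagram increases meal sales",
    measure="meals sold in 1 day",
    target=25,
)
print(evaluate(card, observed=16, capacity=16))
```

Without the production limit in the picture, the same 16 meals would simply read as "target missed", which is exactly why the cutoff alone should not decide what you conclude.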

Bonus: Deep dive into Job to be done

Have you seen Christensen’s Milkshake video? Do you want to learn more about jobs to be done? In this lecture, I explain job hierarchies and how to find jobs to be done in your data.